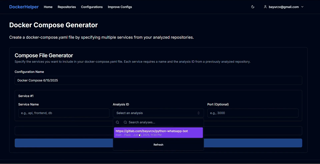

DockerHelper

DevOps, AI, Containerization

Project details

Project Description:

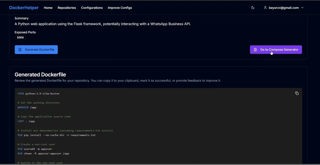

DockerHelper is an intelligent backend API service that automates the creation of Dockerfile and docker-compose.yaml configurations by analyzing Git repositories and leveraging Large Language Models. The tool addresses the time-consuming and often frustrating process of manual Docker configuration, giving developers an AI-powered co-pilot that generates optimized, production-ready containerization setups in seconds.

Problem Statement:

Manually crafting Dockerfile and docker-compose.yaml configurations is repetitive, error-prone, and requires deep knowledge of containerization best practices. Developers often struggle with optimizing layers, ensuring security, and adapting configurations to different project structures. This process slows down development cycles and introduces potential deployment issues that could be avoided with intelligent automation.

Solution and Implementation:

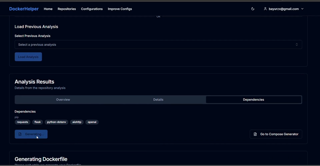

Repository Analysis:

DockerHelper ingests public or private repository URLs, clones them using GitPython, and analyzes file structure, dependencies, and primary language/framework to understand project requirements.
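The analysis step can be illustrated with a minimal sketch. It assumes the repository has already been cloned to a local directory (for example with GitPython's `Repo.clone_from`) and guesses the primary language from well-known manifest files. The `MARKERS` table, `SKIP_DIRS`, and `analyze_repo` are hypothetical names chosen for illustration, not DockerHelper's actual code.

```python
from pathlib import Path

# Hypothetical marker-file table; the real analyzer may rely on other signals.
MARKERS = {
    "requirements.txt": "python",
    "pyproject.toml": "python",
    "package.json": "node",
    "go.mod": "go",
    "Cargo.toml": "rust",
    "pom.xml": "java",
}

# Directories that carry no information about the project itself.
SKIP_DIRS = {".git", "node_modules", "__pycache__"}


def analyze_repo(root: Path) -> dict:
    """Summarize a checked-out repository: files, manifests, language guess."""
    files = sorted(
        p.relative_to(root).as_posix()
        for p in root.rglob("*")
        if p.is_file() and not SKIP_DIRS.intersection(p.relative_to(root).parts)
    )
    manifests = [f for f in files if Path(f).name in MARKERS]
    # Prefer manifests closest to the repository root.
    manifests.sort(key=lambda f: (f.count("/"), f))
    language = MARKERS[Path(manifests[0]).name] if manifests else "unknown"
    return {"files": files, "manifests": manifests, "language": language}
```

Calling `analyze_repo(Path("path/to/clone"))` returns a plain dictionary, which keeps the result easy to serialize into an LLM prompt.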

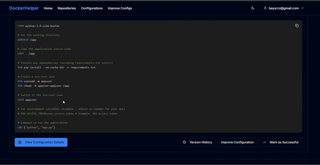

LLM-Powered Generation:

Key analysis data is sent to a Large Language Model (OpenAI GPT or Google Gemini), which generates Dockerfile content and docker-compose.yaml configurations based on project needs and containerization best practices.
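A rough sketch of the generation step follows. It assumes the analysis is a dictionary with `language` and `manifests` keys and treats the model as a plain callable (`complete`), so either provider could be plugged in. The prompt wording and all function names are illustrative assumptions, not the project's real prompt or API.

```python
from typing import Callable

FENCE = "`" * 3  # Markdown code-fence marker that models often wrap replies in.


def build_prompt(analysis: dict) -> str:
    """Turn the analysis summary into a prompt (wording is illustrative)."""
    manifests = ", ".join(analysis.get("manifests", [])) or "none found"
    return (
        "You are a containerization assistant.\n"
        f"Primary language: {analysis.get('language', 'unknown')}\n"
        f"Dependency manifests: {manifests}\n"
        "Write a Dockerfile for this project. Reply with the file content only."
    )


def strip_code_fence(reply: str) -> str:
    """Remove a surrounding Markdown code fence if the model added one."""
    lines = reply.strip().splitlines()
    if lines and lines[0].startswith(FENCE):
        lines = lines[1:]
    if lines and lines[-1].strip() == FENCE:
        lines = lines[:-1]
    return "\n".join(lines).strip() + "\n"


def generate_dockerfile(analysis: dict, complete: Callable[[str], str]) -> str:
    """`complete` is a hypothetical wrapper around whichever LLM client is used."""
    return strip_code_fence(complete(build_prompt(analysis)))
```

Passing the model in as a callable keeps the sketch independent of any provider SDK and makes it easy to substitute a stub in tests.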

Retrieval-Augmented Generation (RAG):

DockerHelper implements a learning system in which user-verified successful configurations are embedded using Sentence Transformers and stored in a ChromaDB vector store. Future generations retrieve similar successful examples to augment LLM prompts for higher accuracy.
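The retrieval idea can be sketched without any external services. In the example below, a toy bag-of-words vector stands in for a Sentence Transformers embedding and an in-memory list stands in for ChromaDB; both substitutions are assumptions made only to keep the sketch self-contained, and `ExampleStore` is a hypothetical name.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words embedding; a stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ExampleStore:
    """In-memory stand-in for a vector store of verified configurations."""

    def __init__(self) -> None:
        self._items: list[tuple[Counter, str, str]] = []

    def add(self, description: str, dockerfile: str) -> None:
        self._items.append((embed(description), description, dockerfile))

    def query(self, description: str, k: int = 2) -> list[tuple[str, str]]:
        """Return the k stored examples most similar to the description."""
        q = embed(description)
        ranked = sorted(self._items, key=lambda it: cosine(q, it[0]), reverse=True)
        return [(desc, df) for _, desc, df in ranked[:k]]
```

The examples returned by `query` would then be appended to the generation prompt as few-shot context.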

Iterative Refinement:

Users can provide natural language feedback or error messages to improve existing configurations. The system maintains full version history, allowing users to revert to previous states and track improvements over time.
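One way to model the version history is an append-only list in which a revert is recorded as a new version rather than a deletion, so nothing is ever lost. This is a minimal sketch under that assumption; `Version` and `ConfigHistory` are hypothetical names, and the real system presumably persists versions in PostgreSQL rather than in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Version:
    number: int
    content: str
    note: str  # e.g. the user feedback that prompted this version


@dataclass
class ConfigHistory:
    versions: list[Version] = field(default_factory=list)

    def commit(self, content: str, note: str) -> Version:
        """Append a new version; earlier versions are never modified."""
        version = Version(len(self.versions) + 1, content, note)
        self.versions.append(version)
        return version

    @property
    def current(self) -> Version:
        return self.versions[-1]

    def revert(self, number: int) -> Version:
        """Restore an earlier version by committing a copy of its content."""
        if not 1 <= number <= len(self.versions):
            raise ValueError(f"unknown version {number}")
        target = self.versions[number - 1]
        return self.commit(target.content, f"revert to v{number}")
```

Recording reverts as new versions keeps the history linear and auditable.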

Multi-Provider Authentication:

Secure OAuth 2.0 integration with Google, GitHub, and GitLab enables private repository analysis while maintaining user security and access control.
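As an illustration of the first leg of the OAuth 2.0 authorization-code flow, the sketch below builds a GitHub authorization URL with a random `state` value and checks that value when the provider redirects back. The function names, the `repo` scope choice, and the callback URL in the usage note are assumptions; the other providers follow the same pattern with their own endpoints.

```python
import secrets
from urllib.parse import urlencode

GITHUB_AUTHORIZE_URL = "https://github.com/login/oauth/authorize"


def build_authorize_url(
    client_id: str, redirect_uri: str, scope: str = "repo"
) -> tuple[str, str]:
    """Return the URL to send the user to, plus the state to store server-side."""
    state = secrets.token_urlsafe(32)  # unguessable value to prevent CSRF
    query = urlencode(
        {
            "client_id": client_id,
            "redirect_uri": redirect_uri,
            "scope": scope,
            "state": state,
        }
    )
    return f"{GITHUB_AUTHORIZE_URL}?{query}", state


def state_matches(expected: str, received: str) -> bool:
    """Constant-time comparison of the stored state with the callback's state."""
    return secrets.compare_digest(expected.encode(), received.encode())
```

For example, `build_authorize_url("my-client-id", "https://example.com/callback")` yields a URL for the browser redirect and a state string to keep in the user's session until the callback arrives.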

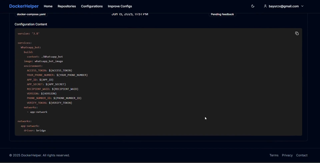

Containerized Architecture:

The entire application is containerized using Docker with docker-compose.yaml orchestrating backend, PostgreSQL, Nginx, and frontend services, with persistent data managed through Docker volumes.

Modern Frontend:

Developed as a Single Page Application using Next.js with TypeScript, styled with Tailwind CSS and Radix UI components for a clean, responsive user experience.

Outcome of the Project:

DockerHelper addresses core developer pain points:

- Reduces time spent on Docker configuration from hours to seconds

- Improves configuration quality through best practices applied by the LLM and examples learned from verified configurations

- Enables iterative improvement through natural language feedback

- Supports complex project structures across multiple languages and frameworks

- Provides secure access to private repositories through OAuth integration

- Maintains configuration history for auditability and rollback capability

- Continuously improves through RAG-based learning from user success patterns

Technologies Used:

Backend & Core:

- Framework: FastAPI (Python)

- Database: PostgreSQL with SQLAlchemy ORM

- LLM Integration: OpenAI GPT, Google Gemini

- Vector Database: ChromaDB with Sentence Transformers

- Authentication: JWT, OAuth 2.0 (Google, GitHub, GitLab)

- Repository Analysis: GitPython

Frontend:

- Framework: Next.js with TypeScript

- Styling: Tailwind CSS

- UI Components: Radix UI

Infrastructure & Deployment:

- Containerization: Docker, Docker Compose

- Web Server: Nginx

- Database Migrations: Alembic

Conclusion

DockerHelper represents a significant advancement in developer tooling by combining AI-powered analysis with practical containerization expertise. The system not only automates repetitive configuration tasks but also learns and improves over time, establishing a new standard for intelligent development assistance in the containerization space.

Summary

DockerHelper is an AI-powered backend API that automates Docker configuration generation by analyzing Git repositories and leveraging Large Language Models. It features intelligent learning through RAG, multi-provider OAuth for private repos, and iterative refinement capabilities, significantly reducing development time while improving containerization quality across diverse project structures.
